Here is a list of useful Unix commands and snippets. You probably know the situation: you have a problem and look for a solution on Stack Overflow or similar sites. Here I collect the commands I have come across over time, or whose switches I simply cannot (or do not want to) remember.

- How do I find all files containing specific text?

grep -rnw '/path/to/somewhere/' -e 'pattern'
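A minimal, self-contained sketch of what the grep switches above do, run against a throwaway directory. The directory and file names are made up for illustration, and --include is assumed to be available (GNU grep and BSD grep both have it).

```shell
#!/bin/sh
# Throwaway tree; the directory and file names are hypothetical.
set -eu

demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
mkdir -p "$demo_dir/src/lib"
printf 'hello world\n' > "$demo_dir/src/a.txt"
printf 'nothing here\nsay hello\n' > "$demo_dir/src/lib/b.txt"
printf 'helloworld\n' > "$demo_dir/src/c.txt"   # no match: -w needs "hello" as a whole word

# -r recursive, -n show line numbers, -w match whole words only, -e the pattern
grep -rnw "$demo_dir" -e 'hello'

# -l prints only the names of matching files; --include limits which files are searched
grep -rlw --include='*.txt' "$demo_dir" -e 'hello'
```

Dropping -w would also match c.txt, because "hello" then only has to appear somewhere in the line.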

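- How do I show or change the default permissions of new files (the mask that controls file permissions)? Without arguments, umask prints the current mask; umask 022, for example, sets a new one for the current shell.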

umask
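A small sketch of how the mask is applied: new files start from mode 666 and new directories from 777, and every bit set in the umask is removed. It assumes Linux with GNU coreutils (stat -c '%a'; on macOS the equivalent is stat -f '%Lp').

```shell
#!/bin/sh
# Assumes GNU coreutils for `stat -c`.
set -eu

demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT

umask            # print the mask of the current shell, e.g. 0022

(
  # Changing the umask inside a subshell leaves the calling shell untouched.
  umask 027      # remove write for the group and all permissions for others
  touch "$demo_dir/file"   # 666 & ~027 = 640
  mkdir "$demo_dir/dir"    # 777 & ~027 = 750
)

stat -c '%a %n' "$demo_dir/file" "$demo_dir/dir"
```

To make a mask permanent, it is usually set in a shell startup file such as ~/.profile.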

- Extract (untar) or create a gzip-compressed tar archive (.tar.gz)

tar -zxvf archive.tar.gz

tar -cvzf archive.tar.gz file1 file2
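A round-trip sketch that creates an archive, lists it without extracting, and extracts it into a different directory. The names project, file1, file2 and restore are placeholders for illustration.

```shell
#!/bin/sh
# Hypothetical file and directory names, created in a temporary directory.
set -eu

work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
cd "$work"

mkdir project
printf 'first\n'  > project/file1
printf 'second\n' > project/file2

# -c create, -z gzip, -v verbose, -f archive file name (must directly precede the name)
tar -czvf archive.tar.gz project

# -t lists the contents without extracting
tar -tzf archive.tar.gz

# -x extract; -C changes into the target directory first (it must already exist)
mkdir restore
tar -xzvf archive.tar.gz -C restore
```

Listing with -t first is a cheap way to check whether an archive unpacks into its own directory or scatters files into the current one.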

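- Copy files via rsync from one host to another (push, pull, to an rsync daemon, via SSH on a custom port). Either the source or the destination must be local.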

rsync -avz LOCAL/SOURCE [USER@]HOST:DEST

rsync -avz [USER@]HOST:SOURCE LOCAL/DEST

rsync -avz LOCAL/SOURCE rsync://[USER@]HOST[:PORT]/DEST

rsync -avz -e "ssh -p 12345" LOCAL/SOURCE [USER@]HOST:DEST

- Using rsync with sudo on the destination machine

- Find out the path to rsync:

which rsync

- Edit the /etc/sudoers file:

sudo visudo

- Add the following line, replacing USERNAME with the login name of the user that rsync will use to log on (that user must be allowed to use sudo) and /usr/bin/rsync with the path printed by which rsync:

USERNAME ALL=NOPASSWD:/usr/bin/rsync

Then, on the source machine, specify that sudo rsync should be used on the remote side:

rsync -avz --rsync-path="sudo rsync" SOURCE [USER@]HOST:DEST

- Preserve SSH_AUTH_SOCK (or other environment variables) when using sudo

sudo --preserve-env=SSH_AUTH_SOCK -s

- nslookup or dig missing? Install the dnsutils package (Debian/Ubuntu), which provides both

sudo apt-get install dnsutils

- Run find without "Permission denied" messages

find / -name 'filename.ext' 2>&1 | grep -v "Permission denied"
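A sketch comparing the filter above with the simpler 2>/dev/null variant, on a throwaway tree with one unreadable directory. The paths are hypothetical; when run as root nothing is denied, so both variants print the same files.

```shell
#!/bin/sh
# Hypothetical tree with one directory locked for non-root users.
set -eu

demo_dir=$(mktemp -d)
trap 'chmod 700 "$demo_dir/locked" 2>/dev/null; rm -rf "$demo_dir"' EXIT
mkdir -p "$demo_dir/open" "$demo_dir/locked"
touch "$demo_dir/open/filename.ext" "$demo_dir/locked/filename.ext"
chmod 000 "$demo_dir/locked"

# 1) Merge stderr into stdout and drop only the "Permission denied" lines.
#    Other errors (e.g. vanished files) still show up.
find "$demo_dir" -name 'filename.ext' 2>&1 | grep -v 'Permission denied' || true

# 2) Discard all error output. Simpler, but hides every other error as well.
#    `|| true` because find exits non-zero after any error.
find "$demo_dir" -name 'filename.ext' 2>/dev/null || true
```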

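- Flush the DNS cache of systemd-resolved (on newer systemd versions, resolvectl flush-caches does the same)

sudo systemd-resolve --flush-caches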

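- Show all listening TCP and UDP ports with numeric addresses and the owning process (-p needs root to show other users' processes; ss -tulpn is the modern equivalent)

netstat -tulpn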

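- Show disk usage: total size of a directory, size of each entry plus a grand total, sizes one level deep, and the size of all Docker container logs

du -sh /var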

du -shc /var/*

du -h --max-depth=1 /var

du -sh /var/lib/docker/containers/*/*.log
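To find the biggest entries quickly, the du output can be piped into sort. This is a sketch on a throwaway tree with made-up sizes; sort -h (sorting human-readable sizes such as 12K and 2.0M) and du --max-depth are assumed to be the GNU versions.

```shell
#!/bin/sh
# Hypothetical directories filled with random data so that compression
# on the filesystem cannot shrink them.
set -eu

demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
mkdir "$demo_dir/small" "$demo_dir/big"
head -c 10000   /dev/urandom > "$demo_dir/small/data"
head -c 2000000 /dev/urandom > "$demo_dir/big/data"

# Size of each entry, human-readable, biggest last
du -sh "$demo_dir"/* | sort -h

# Same idea one level deep, including the directory itself (GNU du)
du -h --max-depth=1 "$demo_dir" | sort -h
```

Appending | tail -n 10 to such a pipeline keeps only the ten largest entries.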

- Search multiple PDF files for a "needle"

pdfgrep -i needle haystack*.pdf

- Show hidden files with ls

ls -la

- Redirect STDOUT and STDERR to the same file (2>&1 must come after > out.txt; in bash, &> out.txt is a shorthand)

your-command > out.txt 2>&1
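A short sketch of why the order of the redirections matters. noisy is a made-up helper that writes one line to each stream.

```shell
#!/bin/sh
# `noisy` is a hypothetical function used only for this demonstration.
set -eu

noisy() {
  echo "to stdout"
  echo "to stderr" >&2
}

work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

# Correct: stdout is pointed at the file first, then stderr is duplicated onto it.
noisy > "$work/out.txt" 2>&1

# Wrong order: stderr is duplicated onto the original stdout before stdout is
# redirected, so "to stderr" is printed instead of ending up in the file.
noisy 2>&1 > "$work/wrong.txt"

cat "$work/out.txt"    # both lines
cat "$work/wrong.txt"  # only "to stdout"
```

Redirections are processed from left to right, and 2>&1 copies wherever stdout points at that moment.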

- Install your SSH public key on a remote host

ssh-copy-id user@remotehost

- A command-line system information tool

neofetch

- Show disk usage, folder sizes, items per folder, find big directories, ... with ncdu

ncdu

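- Monitor network traffic live: iftop shows bandwidth per connection, iptraf (iptraf-ng on newer distributions) per interface and protocol

iftop

iptraf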

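- Clean up unused Docker data (stopped containers, unused networks, dangling images, build cache); --help lists options such as -a and --volumes

docker system prune --help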

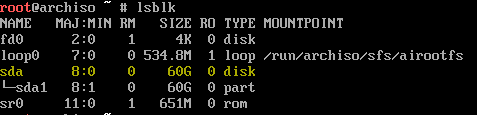

- Find and repair disk errors on ext (ext2, ext3 and ext4) filesystems. Unmount the filesystem first; /dev/sda2 is only an example device.

sudo e2fsck -f /dev/sda2